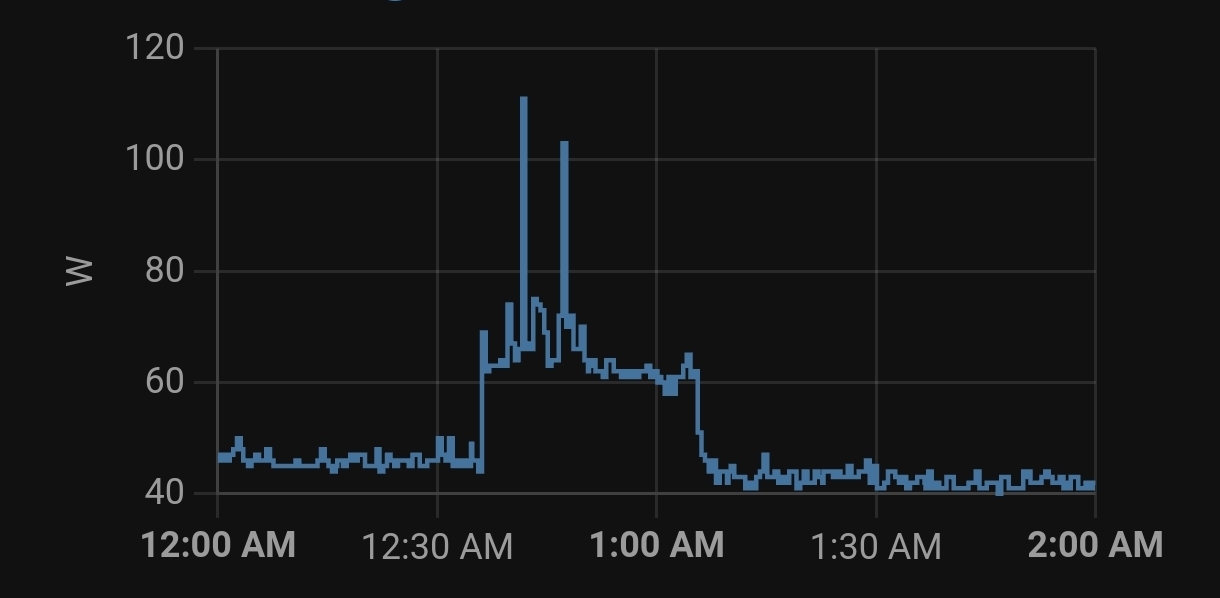

For comparison, I run a ThinkStation P300 with an i7-4790 (84W TDP) 24/7, and its power usage looks like this:

Even when idling, this old processor still guzzles 45W. Certainly not as nice as GP’s, which only uses 10W at idle.

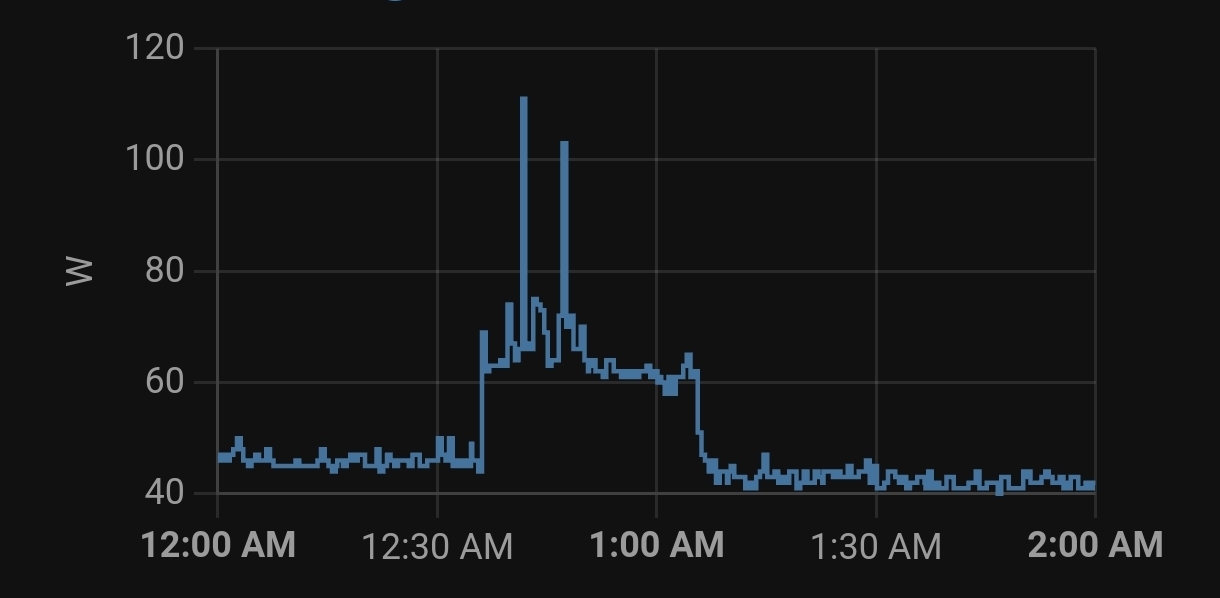

Power scaling on these old CPUs isn’t great, though. Mine is slightly newer, and at idle it still draws roughly 50% of its TDP.

A Xeon E5-2670 has a 115W TDP, which means 2x115=230W for the two processors alone. With 8 RAM modules at ~3W each, it’s going to guzzle ~250W under load while screaming like a jet engine. Assuming $0.12/kWh, that’s $262.80 per year for electricity alone.
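The same back-of-the-envelope math as a quick Python calculation. All inputs are the rough figures from this comment (TDP used as a stand-in for real draw, ~3W per DIMM, an assumed $0.12/kWh), so treat the result as a ballpark, not a measurement.

```python
# Rough annual electricity cost for a dual Xeon E5-2670 box.
# Every constant is an estimate taken from the comment, not a measured value.
CPU_TDP_W = 115          # per E5-2670
CPU_COUNT = 2
RAM_MODULES = 8
RAM_W_PER_MODULE = 3     # rough per-DIMM estimate
PRICE_PER_KWH = 0.12     # USD, assumed rate
HOURS_PER_YEAR = 24 * 365


def annual_cost(watts: float, price_per_kwh: float = PRICE_PER_KWH) -> float:
    """Cost in USD of drawing `watts` continuously for one year."""
    kwh = watts * HOURS_PER_YEAR / 1000
    return kwh * price_per_kwh


cpu_w = CPU_TDP_W * CPU_COUNT            # 230 W
ram_w = RAM_MODULES * RAM_W_PER_MODULE   # 24 W
total_w = cpu_w + ram_w                  # 254 W, i.e. "~250 W"

print(f"CPUs {cpu_w} W + RAM {ram_w} W = {total_w} W")
print(f"At a rounded 250 W: ${annual_cost(250):.2f}/year")
```

Plugging in a smaller figure for a modern workstation (for example `annual_cost(60)`) gives a rough sense of the savings.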

It would be great if you have an isolated server room to contain the noise and cheap electricity, but a more modern workstation should use a quarter of the electricity or even less.

Google Reader was the best. Not sure why Google killed it, but it was really good at both content discovery and keeping up with sites you’re interested in. I tried several alternatives, but nothing came close, so I gave up and spent more time on forums and link aggregators like Slashdot, Hacker News, Reddit, and now Lemmy for content discovery. I’m also interested to hear what others use.

The FBI would arrest the Jellyfin devs before they could even hit the release button.

Yes, but autossh will automatically try to re-establish the connection when it’s down, which is perfect for servers behind CGNAT that you can’t physically access. Basically a set-and-forget kind of app.
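The core idea behind that behaviour is a supervisor loop: run the tunnel, and if it dies, wait a bit and start it again. Here is a minimal Python sketch of that idea. It is not autossh’s actual implementation, which also monitors the link and has its own restart timing. `run_tunnel` is a hypothetical callable that runs the tunnel and returns its exit code, and the backoff values are arbitrary.

```python
import time
from typing import Callable


def supervise(run_tunnel: Callable[[], int],
              max_restarts: int = 5,
              base_delay: float = 1.0,
              max_delay: float = 60.0,
              sleep: Callable[[float], None] = time.sleep) -> int:
    """Re-run `run_tunnel` until it exits cleanly (code 0) or we give up.

    Returns the number of restarts performed. Delays grow exponentially
    (1s, 2s, 4s, ...) and are capped at `max_delay`.
    """
    restarts = 0
    while True:
        code = run_tunnel()
        if code == 0:
            return restarts  # clean exit: stop supervising
        if restarts >= max_restarts:
            raise RuntimeError(f"tunnel kept failing (last exit code {code})")
        delay = min(base_delay * 2 ** restarts, max_delay)
        sleep(delay)  # back off before reconnecting
        restarts += 1
```

In practice `run_tunnel` could wrap `subprocess.run([...]).returncode` for an ssh command. Injecting `sleep` keeps the loop testable without real waiting.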

If this server is running Linux, you can use autossh to forward ports to another server. In this example they only use it to forward the ssh port, but it can forward any port you want: https://www.jeffgeerling.com/blog/2022/ssh-and-http-raspberry-pi-behind-cg-nat
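As a sketch of the kind of command involved, this builds an autossh invocation that reverse-forwards several local ports to a public server. The host, user, and port numbers are hypothetical. `-M 0` (disable autossh’s monitor port and rely on ssh keepalives), `-N`, `-R`, and the `ServerAliveInterval`, `ServerAliveCountMax`, and `ExitOnForwardFailure` options are standard autossh/OpenSSH options, but check them against the man pages for your versions.

```python
import shlex


def autossh_command(remote: str, forwards: dict[int, int]) -> list[str]:
    """Build an autossh argv that reverse-forwards local ports to `remote`.

    `forwards` maps a port on the remote server to a port on this machine,
    e.g. {2222: 22} exposes this machine's ssh on the remote's port 2222.
    """
    cmd = [
        "autossh", "-M", "0",               # no monitor port; rely on ssh keepalives
        "-N",                               # no remote command, forwarding only
        "-o", "ServerAliveInterval=30",     # probe the server every 30 s
        "-o", "ServerAliveCountMax=3",      # give up after 3 missed probes, autossh restarts ssh
        "-o", "ExitOnForwardFailure=yes",   # fail if a remote port can't be bound
    ]
    for remote_port, local_port in sorted(forwards.items()):
        cmd += ["-R", f"{remote_port}:localhost:{local_port}"]
    cmd.append(remote)
    return cmd


# Hypothetical VPS, forwarding ssh and a web service.
print(shlex.join(autossh_command("tunnel@vps.example.com", {2222: 22, 8080: 80})))
```

Pass the list to `subprocess.run` to actually start the tunnel. By default sshd binds reverse-forwarded ports to the server’s loopback interface only. Making them reachable from outside needs `GatewayPorts` in the server’s sshd_config, or a reverse proxy on the VPS.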

By “remotely accessible”, do you mean remotely accessible to everyone or just to you? If it’s just you, then you don’t need to set up a reverse proxy. You can use your router as a VPN gateway (assuming you have a static IP address), or you can use Tailscale or ZeroTier.

If you want to make your services remotely accessible to everyone without using a VPN, then you’ll need to expose them to the world somehow. How to do that depends on whether you have a static IP address or are behind CGNAT. If you have a static IP, you can forward ports 80 and 443 to your reverse proxy (e.g. Nginx Proxy Manager), which works best if you have your own domain name so you can map each service to its own subdomain in the proxy. If you’re behind CGNAT, you’re going to need an external server/VPS that routes traffic from its ports 80 and 443 into your home network, essentially giving you a static IP address.
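The subdomain-per-service setup boils down to a lookup table in the reverse proxy. It reads the `Host` header of each request arriving on port 80/443 and picks the internal backend. Here is a toy Python sketch of that lookup. The domain and LAN addresses are made up, and in a real setup you would configure this in Nginx Proxy Manager (or similar), not in code.

```python
from typing import Optional

# Hypothetical mapping of public subdomains to services on the home LAN.
ROUTES = {
    "cloud.example.com": "http://192.168.1.10:8080",   # e.g. Nextcloud
    "media.example.com": "http://192.168.1.11:8096",   # e.g. Jellyfin
}


def pick_backend(host_header: str) -> Optional[str]:
    """Return the upstream URL for a request's Host header, or None (i.e. 404).

    Assumes domain-name hosts; IPv6 literals like "[::1]:443" aren't handled.
    """
    # Host may include a port ("cloud.example.com:443") and any letter case.
    host = host_header.split(":", 1)[0].lower()
    return ROUTES.get(host)
```

Adding a service is just one more entry in the table plus a DNS record for the new subdomain.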

I think you can send a SIGUSR1 signal to the Mumble process to tell it to reload the SSL certificate without actually restarting it. You can use `docker kill --signal="SIGUSR1" <container name or id>`, but then you still need to give your user access to the docker group. Maybe you could set up a monthly cron job for the root user to run that command?
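If you’d rather wrap that in a script for cron, here is a minimal Python sketch that sends the signal through the Docker CLI. It assumes `docker` is on PATH and the caller can reach the Docker daemon (root, or a member of the docker group). `mumble` is a hypothetical container name. Whether your Mumble server version actually reloads its certificate on SIGUSR1 is worth confirming in its docs.

```python
import subprocess
from typing import Callable


def reload_command(container: str, signal: str = "SIGUSR1") -> list[str]:
    """argv asking Docker to send `signal` to the container's main process."""
    return ["docker", "kill", f"--signal={signal}", container]


def send_reload(container: str,
                run: Callable[..., subprocess.CompletedProcess] = subprocess.run) -> int:
    """Send the reload signal and return docker's exit code (0 on success)."""
    result = run(reload_command(container))
    return result.returncode
```

A root crontab entry with the schedule `0 3 1 * *` runs at 03:00 on the first day of each month. Point it at a small script that calls `send_reload("mumble")`.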

Note that rsync.net includes 7 days of daily snapshots for free. Also, the main advantage over Backblaze B2 for me is that you can just sync a whole folder full of small files instead of first compressing them into an archive before uploading to a B2 bucket. This means you can access individual files later without needing to download the whole archive.

I still use B2 to store long-term backup archives, though.

Aye. Docker on Linux doesn’t involve any virtualization layer. What should the direct installation setup be called, then? Custom setup?

I’m currently using nextcloud:26-apache from here because some Nextcloud apps I use aren’t compatible with v27 and v28 yet. The Apache version is actually less hassle to use because Nextcloud can generate its .htaccess configuration dynamically by itself, unlike the php-fpm version, where you have to maintain your own nginx configuration. The php-fpm version is supposedly faster and scales better, though chances are you won’t see those benefits unless your server handles a lot of traffic.

People usually come here looking for advice on how to replace their dockerized nextcloud setup with a bare-metal setup. Now you came along presenting a solution to do the reverse! Bravo!

What do you guys think about putting the different components (webserver, php, redis, etc.) in separate containers like this, as compared to all in one?

I actually have a similar setup, but with the Nextcloud Apache container instead of php-fpm, and on RKE2 instead of Docker Compose.

Some distros don’t map the www-data user to UID 33, so if you’re on one of those, changing the file owner to UID 33 won’t help you. I’m pretty sure Ubuntu uses UID 33, but I’ve seen people on other distros get bitten by this. Also, some container systems can remap file ownership when mounting a volume.
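Rather than assuming UID 33, you can ask the system what www-data actually maps to and list files that don’t match. Here is a minimal sketch using Python’s standard `pwd` and `os` modules (Unix only). Run it where the relevant /etc/passwd applies: inside the container if that’s where the web server runs.

```python
import os
import pwd
from typing import Iterator, Optional


def uid_of(user: str) -> Optional[int]:
    """UID of `user` on this system, or None if the account doesn't exist."""
    try:
        return pwd.getpwnam(user).pw_uid
    except KeyError:
        return None


def files_not_owned_by(root: str, uid: int) -> Iterator[str]:
    """Yield paths under `root` (including `root`) whose owner UID isn't `uid`."""
    if os.lstat(root).st_uid != uid:
        yield root
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            if os.lstat(path).st_uid != uid:  # lstat: don't follow symlinks
                yield path
```

For example, `files_not_owned_by("/path/to/nextcloud", uid_of("www-data"))` (path hypothetical) lists the files that would still trip up the web server. If `uid_of` returns None, the distro or image has no www-data account at all.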

One thing to watch for is file permissions. Just make sure everything is owned by www-data and you’re golden.

When I read the headline, I thought the White House actually ran its own Mastodon instance. That would mark the point where Mastodon reached mainstream use, which would be an incredible milestone.

I was excited for nothing…

Using Apache is smoother for beginners, though, because Nextcloud can handle some of the web server configuration it needs by generating a .htaccess file itself, without user intervention. On nginx you might need to tweak the web server configuration yourself every once in a while when you update Nextcloud, which OP seems to hate doing.

I had to unsubscribe from some of kbin’s magazines because bots have been constantly posting spam there for the past few months. It’s bad. I didn’t know the dev was doing double duty as a mod as well.